Build scenes. Train AI. Run them anywhere.

OpenFluke is a unified playground for physics scenes, native and web runtimes, and AI behaviors. Design once, deploy to Biocraft (web) or Primecraft (native) with identical JSON assets.

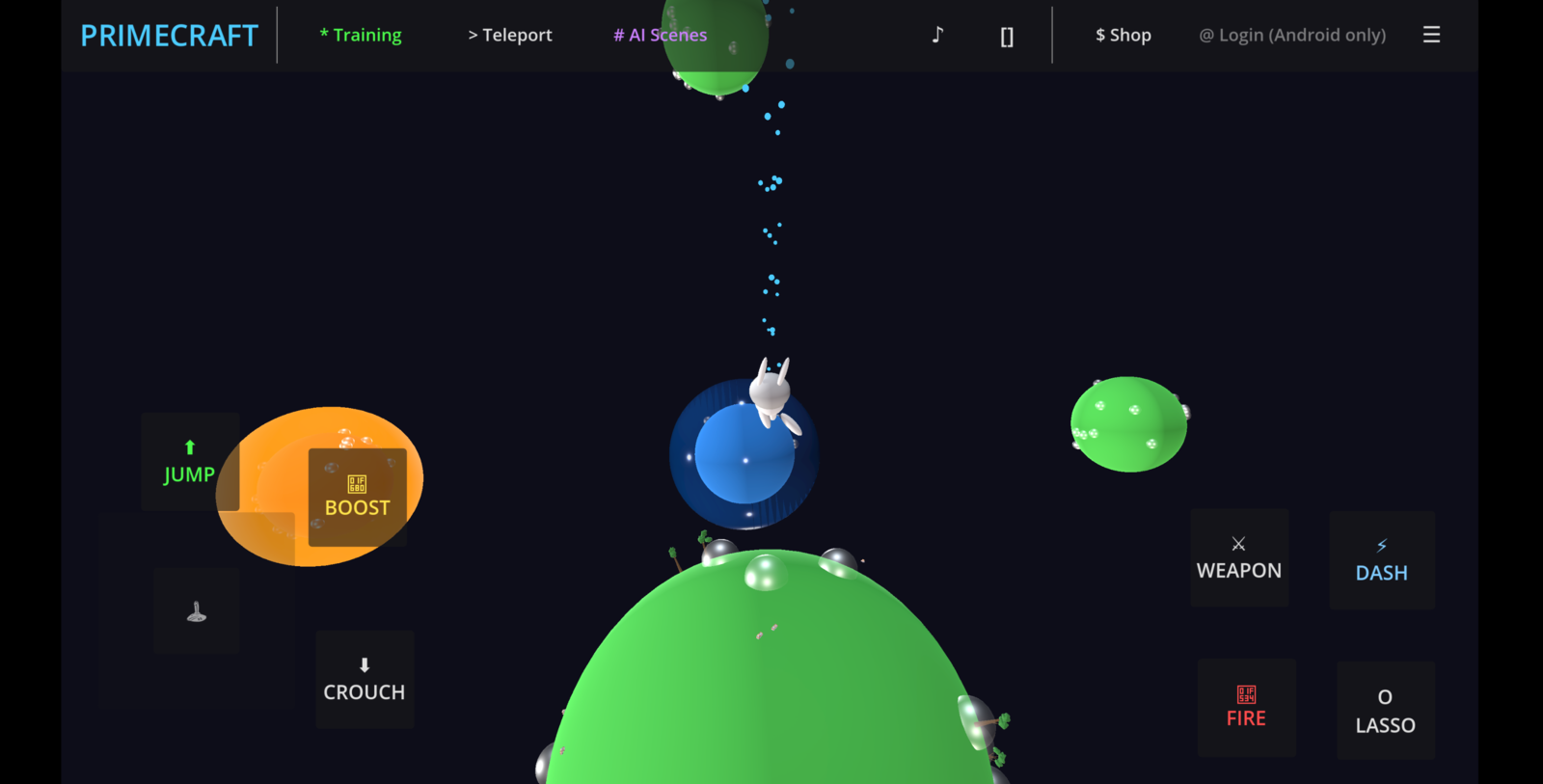

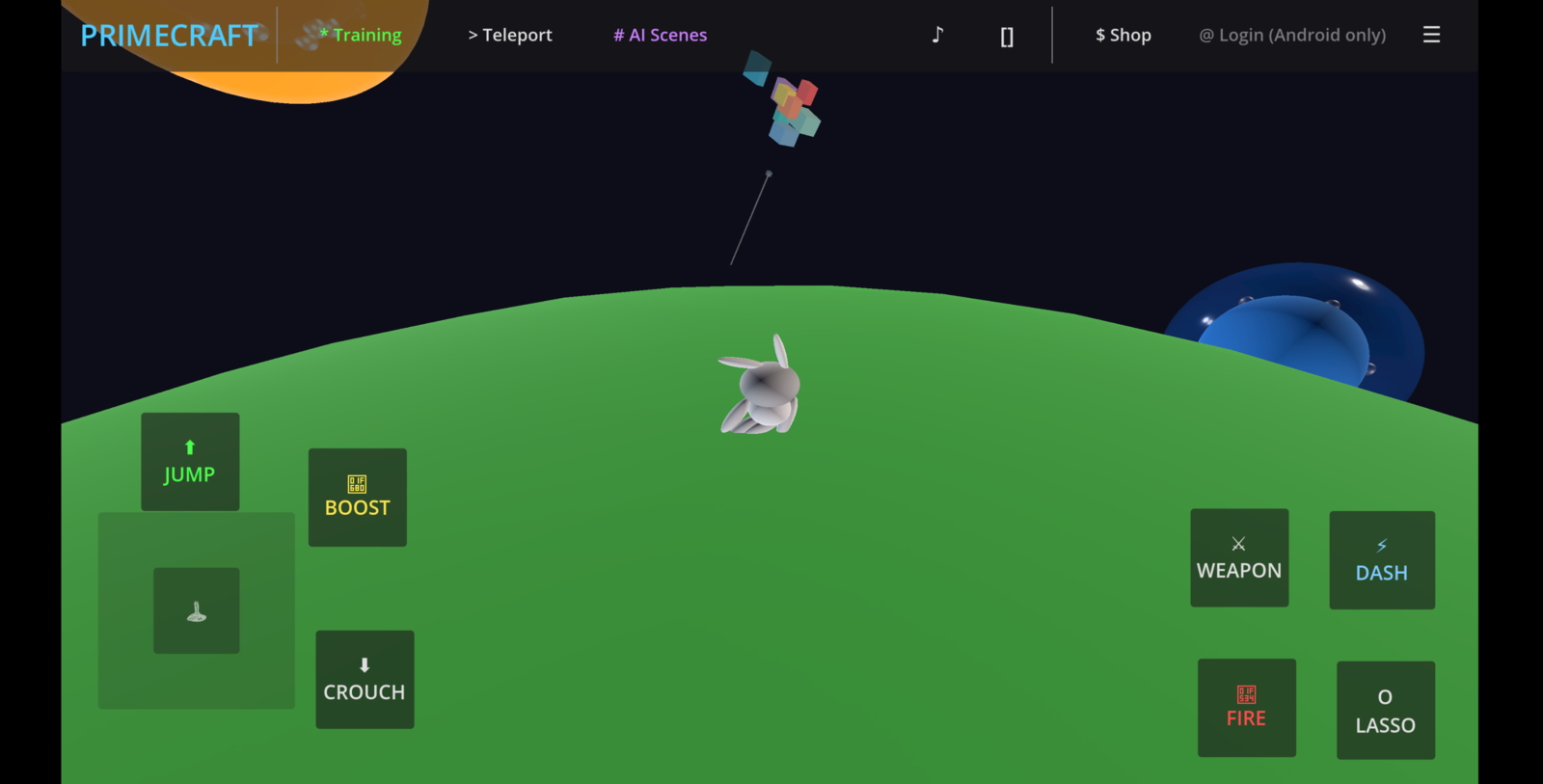

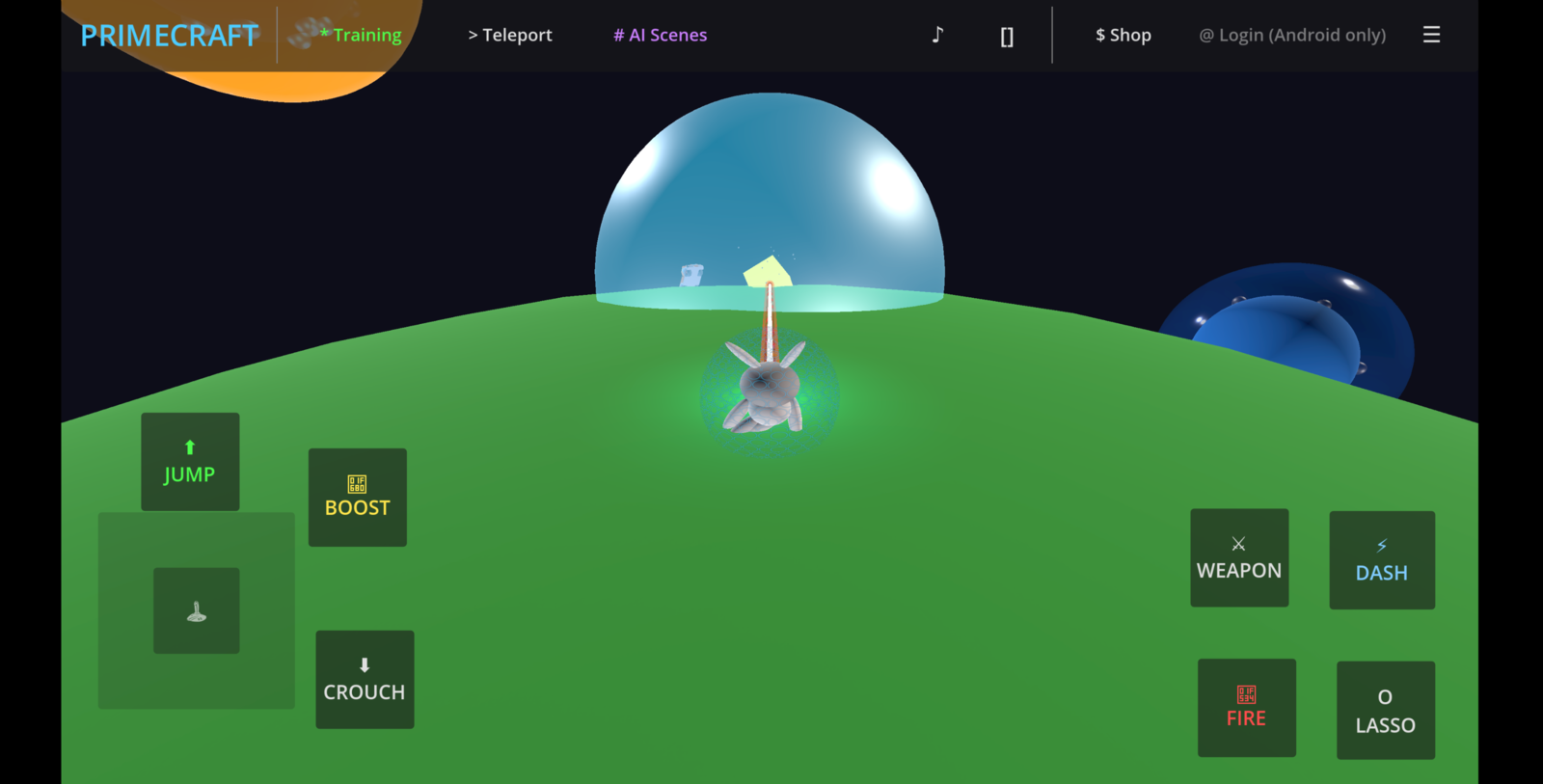

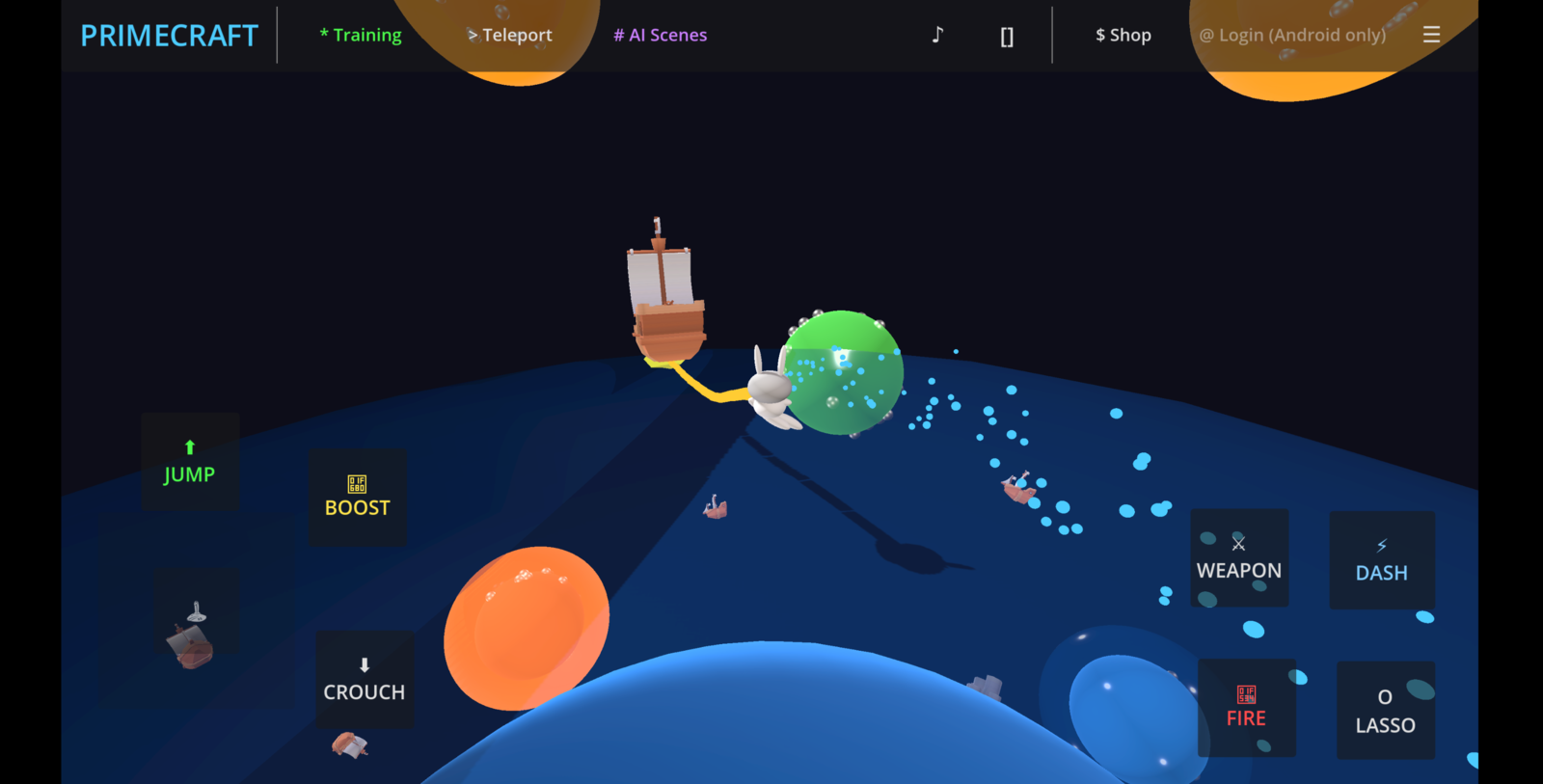

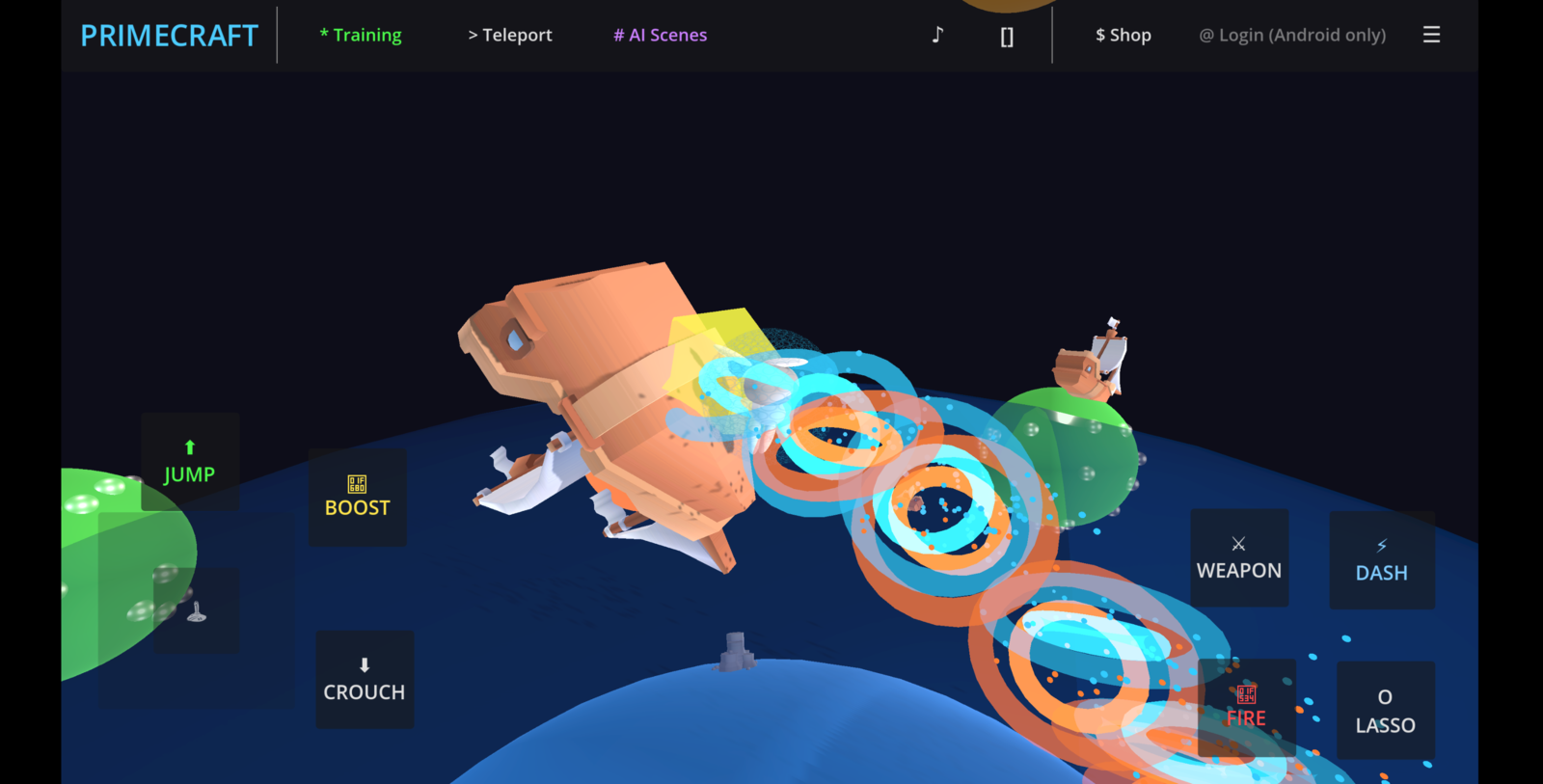

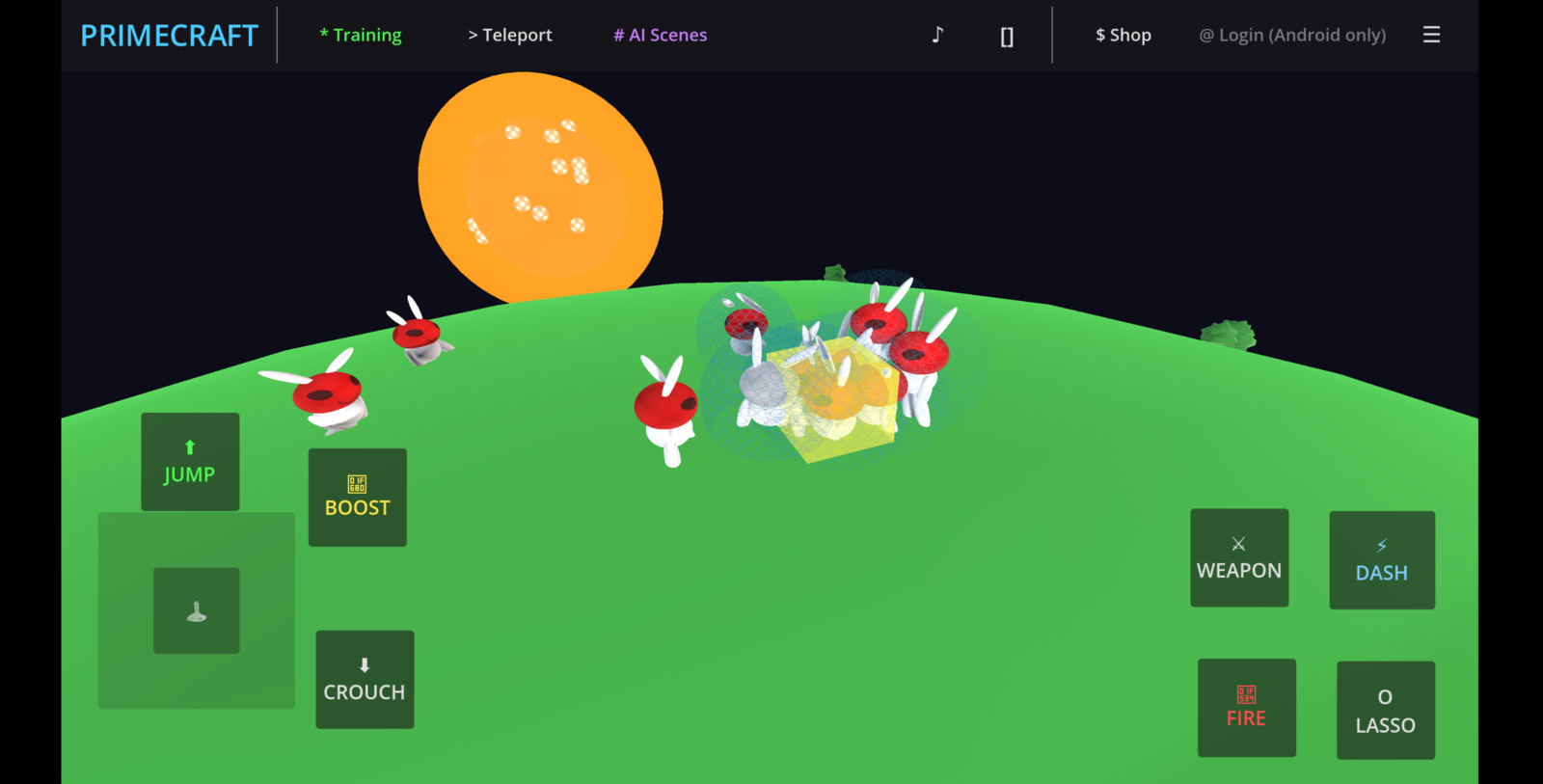

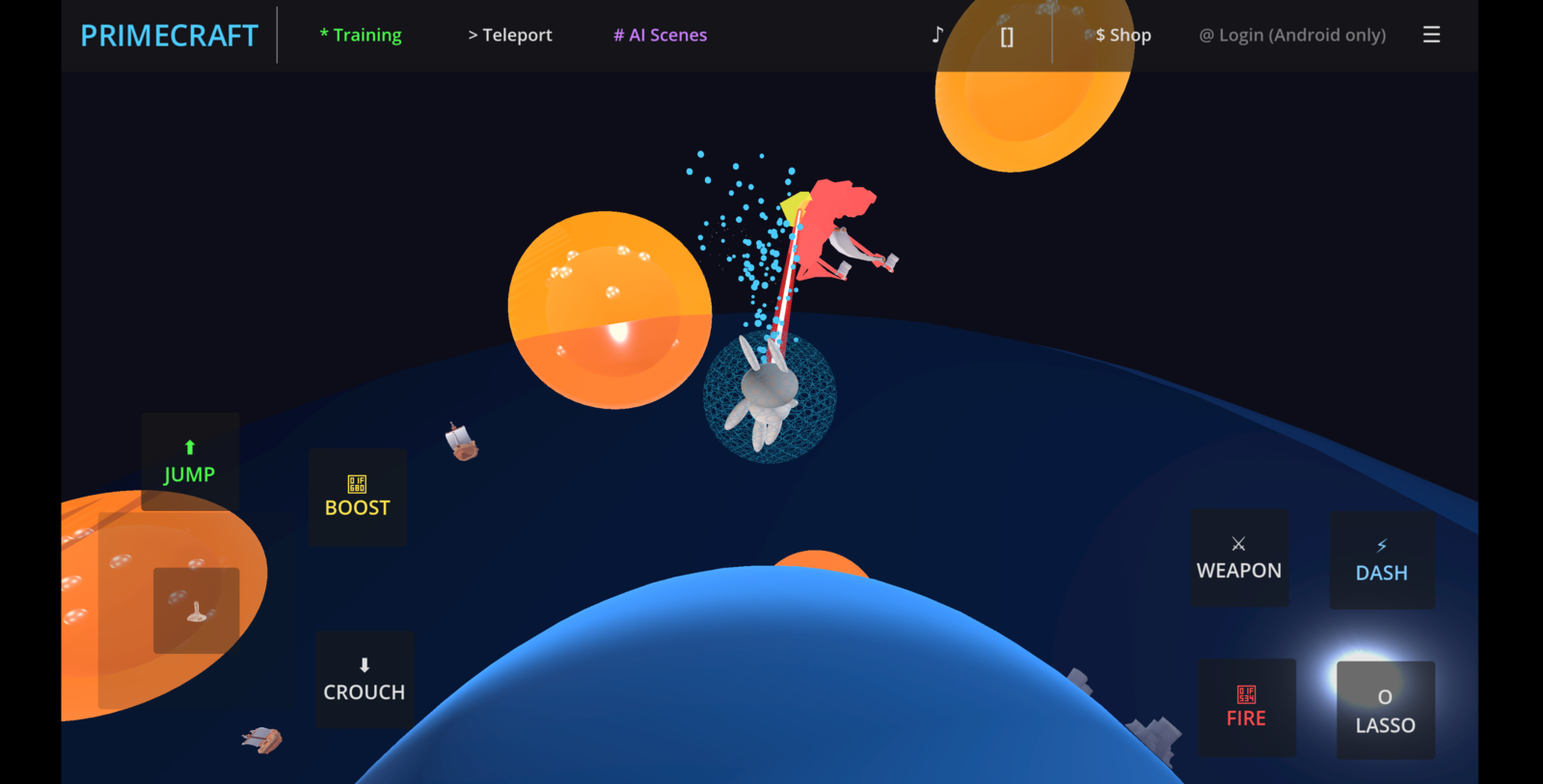

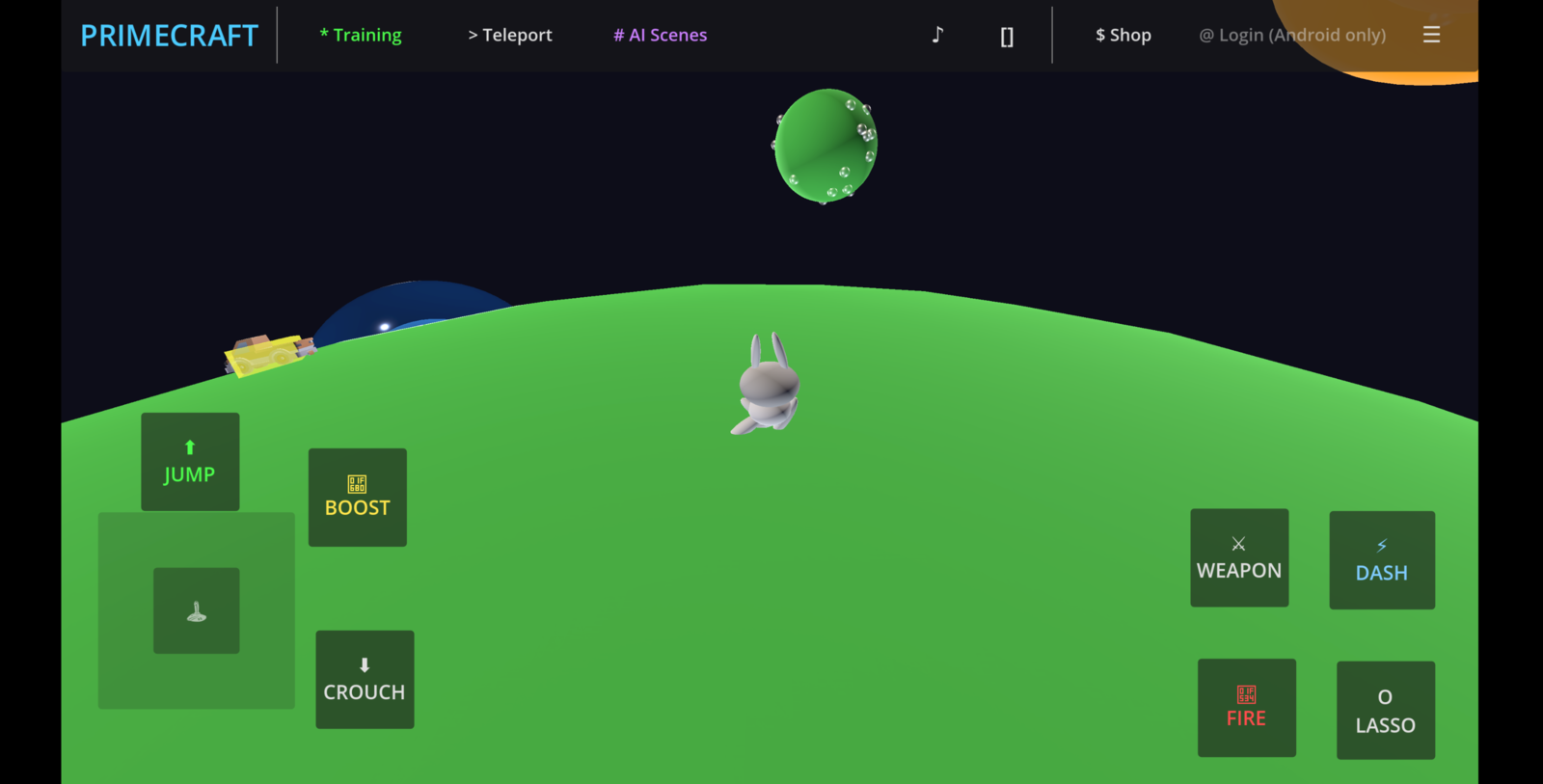

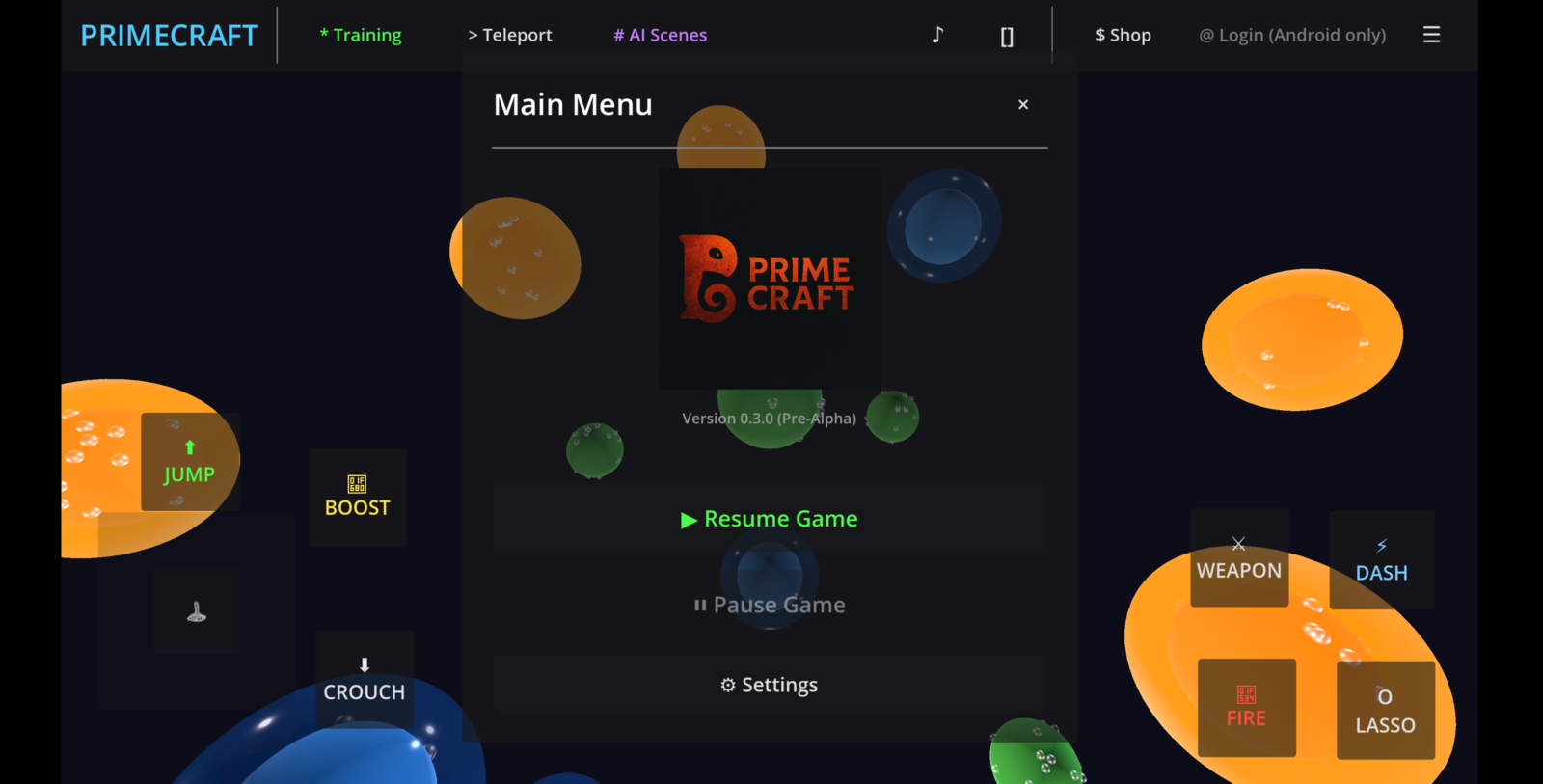

Primecraft Gallery

A few moments from the native runtime — high frame rates, zero drift.

How it works

One pipeline from authoring to AI to deployment.

Author

Design in your Lab

Sketch scenes, physics, and controllers with JSON blueprints. Keep everything versioned and portable.

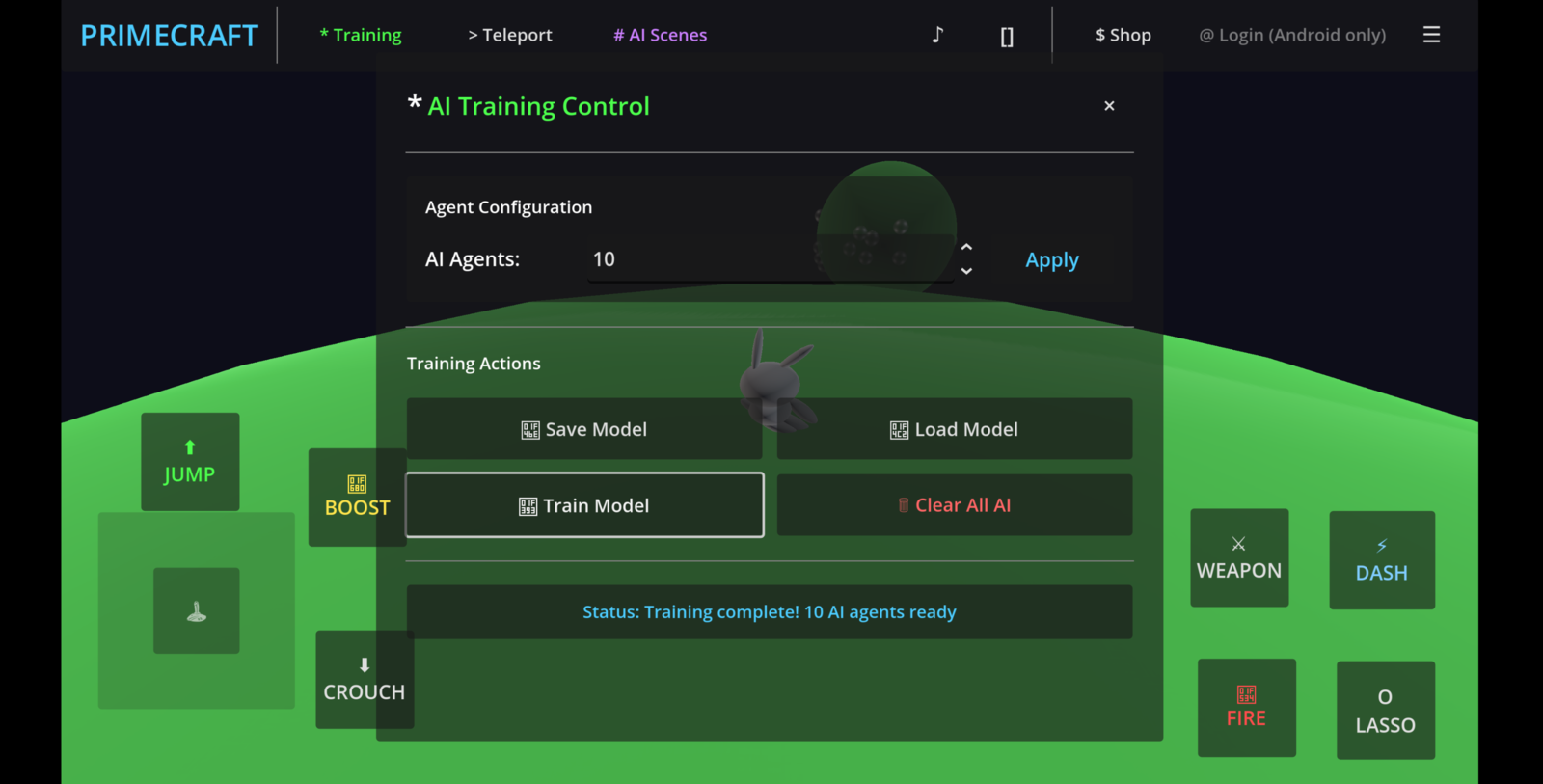

Train

LOOM AI behaviors

Train operators locally or in the browser with LOOM; export reflexes and networks that stay in sync with your scenes.

Deploy

Run in Biocraft & Primecraft

WebGPU studio for instant iteration; native runtime for shipping, tournaments, and zero-drift QA.

Share

Publish once

Publish scenes and assets publicly; anyone can pull JSON over the API or launch the interactive view.
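As a rough illustration of pulling a published scene's JSON, here is a minimal Python sketch. This page does not document the API, so the base URL, the `/scenes/{owner}/{name}` path layout, and the function names `scene_url`, `parse_scene`, and `fetch_scene` are all hypothetical placeholders; swap in the real endpoint before using it.

```python
import json
from urllib.parse import quote
from urllib.request import urlopen

# Hypothetical base URL and path layout: the real API is not documented here.
API_BASE = "https://example.com/api"


def scene_url(owner: str, name: str, base: str = API_BASE) -> str:
    """Build the (assumed) URL for a published scene's JSON."""
    # Percent-encode both parts so names with spaces or colons stay valid.
    return f"{base}/scenes/{quote(owner, safe='')}/{quote(name, safe='')}"


def parse_scene(text: str) -> dict:
    """Parse a scene payload and check that the top level is a JSON object."""
    scene = json.loads(text)
    if not isinstance(scene, dict):
        raise ValueError("expected a JSON object at the top level")
    return scene


def fetch_scene(owner: str, name: str, base: str = API_BASE) -> dict:
    """Download and parse a scene. Requires network access and a real endpoint."""
    with urlopen(scene_url(owner, name, base), timeout=10) as resp:
        return parse_scene(resp.read().decode("utf-8"))
```

Under these assumptions, `scene_url("sam", "ragdoll-falldown")` yields a URL ending in `/scenes/sam/ragdoll-falldown`. Keeping URL construction and parsing separate from the network call makes both easy to check offline.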

Random scenes from the lab

A fresh shuffle of published scenes every load. Click through to open.

ragdoll-falldown

By @sam

isthisabearordog

By @sam

bear_or_dog

By @sam

reflex-sequence-lets-go-bowl

By @sam

bear-or-dog

By @sam

something_random

By @sam

throw_down

By @sam

playground

By @sam

open-the-gates

By @sam

splat

By @sam

reflex-automation-example

By @sam

Treasure Hunt Explorer: Q-Learning Maze

By @ailearner